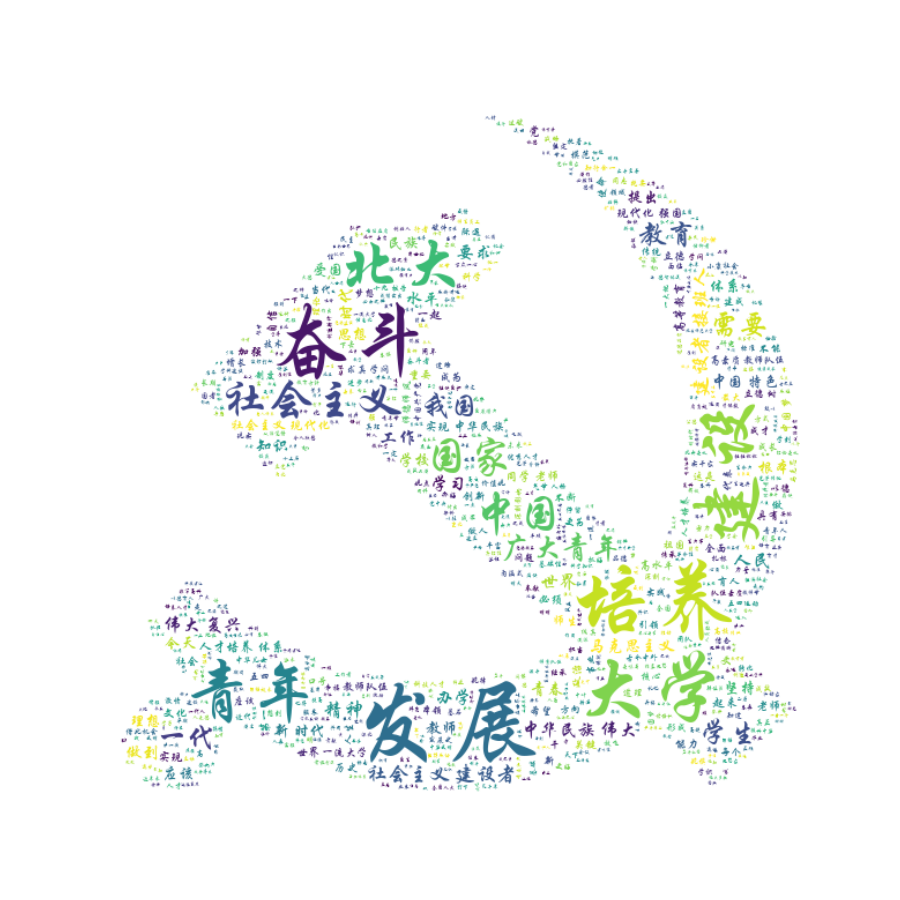

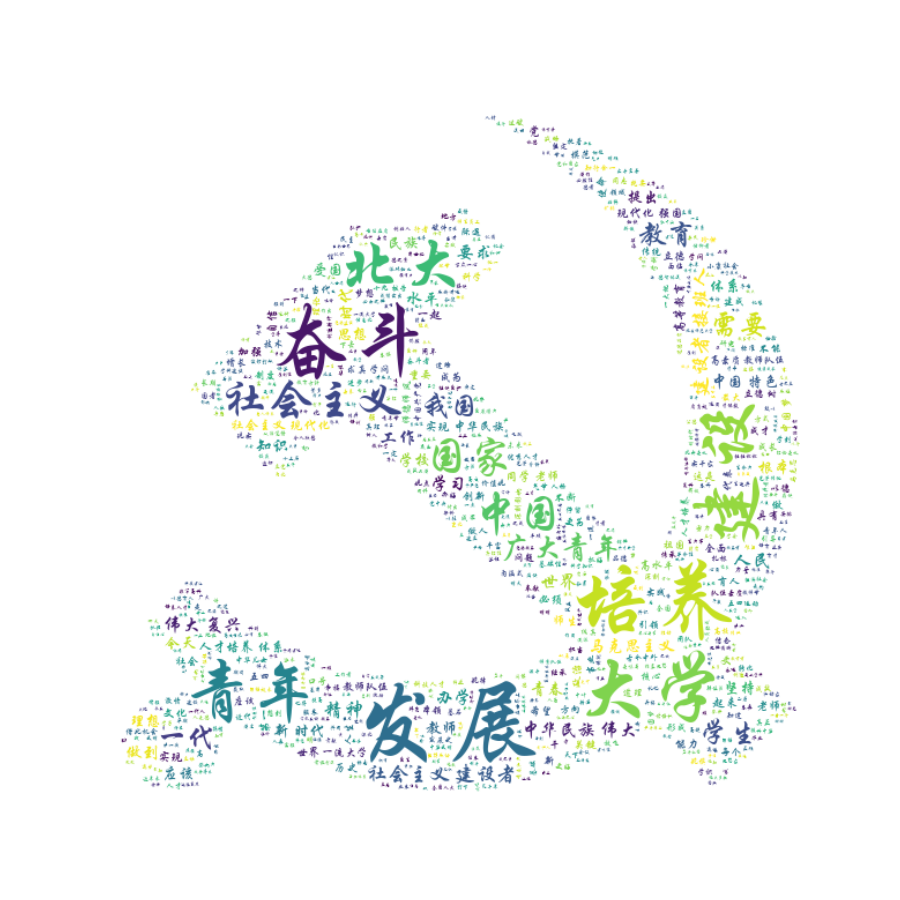

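| from nltk import FreqDist
import jieba
from matplotlib import pyplot as plt
from wordcloud import WordCloud
import numpy as np
from PIL import Image

# Read the speech text and strip spaces before segmentation.
with open('Report14-习近平在北京大学师生座谈会上的讲话.txt', encoding='utf-8') as f:
    file_data = f.read().replace(' ', '')

# Segment the Chinese text into a list of words.
cut_words = jieba.lcut(file_data)

# Load the stop-word list as a set (assumes whitespace-separated entries),
# so membership is tested per word rather than by substring of one long string.
with open('停用词表.txt', encoding='utf-8') as f:
    stop_words = set(f.read().split())

# Keep words that are neither whitespace-only nor stop words.
new_data = []
for word in cut_words:
    if word.strip() and word not in stop_words:
        new_data.append(word)

# Count word frequencies; most_common() sorts by descending count.
freq_list = FreqDist(new_data)
print(new_data)
most_common_words = freq_list.most_common()

def makeImage(text):
    # The mask image determines the shape of the word cloud.
    alice_mask = np.array(Image.open("picture.jpg"))
    plt.figure(figsize=(9, 9))
    wc = WordCloud(font_path=font, background_color='white', max_words=1000, mask=alice_mask, width=900, height=900)
    wc.generate(text)
    plt.imshow(wc, interpolation="bilinear")
    plt.axis("off")
    plt.show()

# A font with Chinese glyphs is required to render the words.
font = r'C:\Windows\Fonts\STXINGKA.TTF'
makeImage(" ".join(new_data))
|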
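The listing computes `most_common_words` but never inspects it. As a minimal sketch of the filtering and counting steps in isolation, the block below uses the standard library's `collections.Counter` in place of `FreqDist`, and a small in-memory token list in place of the `jieba` output. The helper names `filter_tokens` and `top_words` and the sample data are hypothetical and not part of the original script.

```python
from collections import Counter


def filter_tokens(tokens, stop_words):
    """Drop whitespace-only tokens and any token found in the stop-word set."""
    return [t for t in tokens if t.strip() and t not in stop_words]


def top_words(tokens, n=3):
    """Return the n most frequent tokens as (token, count) pairs."""
    return Counter(tokens).most_common(n)


# Hypothetical sample standing in for the segmented text and the stop-word file.
sample_tokens = ["青年", "的", "理想", "\n", "青年", "是", "希望", "青年", "理想"]
sample_stop_words = {"的", "是"}

kept = filter_tokens(sample_tokens, sample_stop_words)
print(top_words(kept, 2))
```

Holding the stop words in a set makes each lookup an exact per-word match, whereas testing against the raw file contents as one string would also discard any word that happens to be a substring of the file.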